Nvidia has recently introduced two innovative personal AI supercomputers, the DGX Spark and the DGX Station, both powered by the cutting-edge Grace Blackwell platform. Announced on March 18, 2025, at GTC 2025, these systems aim to democratize access to data-center-grade AI capabilities, catering to AI developers, researchers, data scientists, and students. Designed to handle large AI models directly from a desktop, these machines offer a blend of power, portability, and seamless integration with Nvidia’s broader AI ecosystem.

DGX Spark: Compact Powerhouse with Retro Flair

The DGX Spark, formerly known as Project DIGITS, is a small-form-factor device, comparable in size to a Mac Mini, built around the GB10 Grace Blackwell Superchip. It delivers up to 1 petaflop (1,000 TOPS) of AI performance at FP4 precision, enough to run substantial AI workloads locally. Key specifications include:

- 128GB of LPDDR5X memory for fast data access.

- Up to 4TB of NVMe storage for ample space to store models and datasets.

- Runs on the Linux-based DGX OS, ensuring compatibility with developer workflows.

Priced at approximately $3,000, the DGX Spark is available for preorder, with shipping slated to begin in summer 2025. It also stands out visually: Boing Boing noted its smoked-gold, 1970s-inspired design.

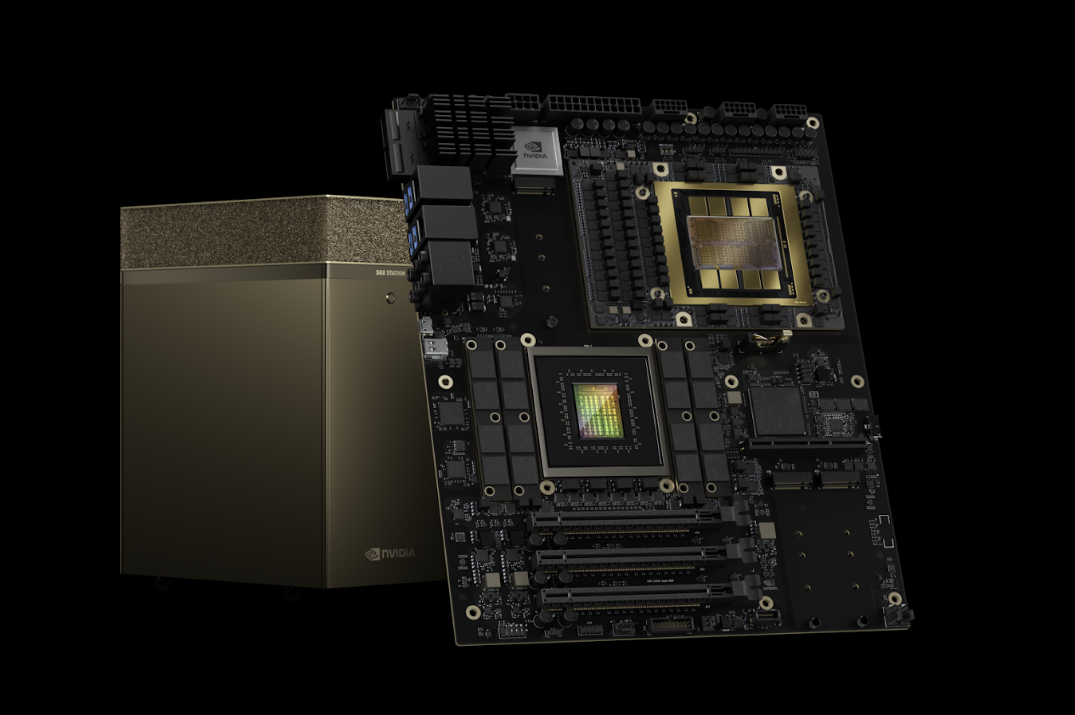

DGX Station: Server-Class Performance for Demanding Tasks

For users requiring even more computational muscle, the DGX Station steps up as a larger, server-class desktop solution. It features the Blackwell Ultra GPU, positioning it as a high-performance option for more intensive AI tasks. While specific pricing remains undisclosed, the DGX Station will be available later in 2025 through Nvidia’s manufacturing partners, including ASUS, BOXX, Dell, HP, Lambda, and Supermicro. This system is tailored for those pushing the boundaries of AI research and development, as highlighted in The Verge.

Versatility and Ecosystem Integration

Both the DGX Spark and DGX Station are engineered for flexibility. They can operate as standalone desktop AI labs, enabling local prototyping and fine-tuning of large AI models. Alternatively, they serve as “bridge systems”, facilitating a smooth transition of AI workloads from desktop to cloud infrastructures such as Nvidia’s DGX Cloud, with minimal code adjustments. This versatility is enhanced by their integration into Nvidia’s full-stack AI platform, which includes access to Nvidia NIM microservices through the Nvidia AI Enterprise software platform.
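One way to read "minimal code adjustments" is that the application code stays the same and only the endpoint it talks to changes. The sketch below illustrates that pattern under stated assumptions: both URLs, the `INFERENCE_TARGET` and `CLOUD_BASE_URL` environment variables, the model name, and the helper functions are hypothetical, and the request shape assumes the serving layer exposes an OpenAI-style chat completions route. It only builds the request; it does not send it.

```python
import json
import os

# Hypothetical endpoints for illustration; neither URL comes from the article.
LOCAL_BASE_URL = "http://localhost:8000/v1"
CLOUD_BASE_URL = "https://example.invalid/v1"


def resolve_base_url(env=None) -> str:
    """Pick the inference endpoint from configuration instead of hard-coding it."""
    env = os.environ if env is None else env
    target = env.get("INFERENCE_TARGET", "local")
    if target == "local":
        return LOCAL_BASE_URL
    if target == "cloud":
        return env.get("CLOUD_BASE_URL", CLOUD_BASE_URL)
    raise ValueError(f"unknown INFERENCE_TARGET: {target!r}")


def build_chat_request(base_url: str, model: str, prompt: str) -> dict:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return {"url": f"{base_url}/chat/completions", "body": json.dumps(body)}


# The calling code is identical for desktop and cloud; only the env var differs.
request = build_chat_request(resolve_base_url(), "demo-model", "Summarize this log file.")
print(request["url"])
```

Keeping the target in configuration means a prototype built on the desktop can be pointed at a hosted endpoint without touching the request-building code, which is the kind of transition the "bridge system" framing describes.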

Technical Deep Dive: Grace Blackwell Architecture

The heart of both systems is Nvidia’s Grace Blackwell architecture. The DGX Spark’s GB10 Superchip combines a high-performance Arm-based CPU with a Blackwell GPU, sharing LPDDR5X memory with bandwidth exceeding 200GB/s. The DGX Station pairs its Blackwell Ultra GPU with 784GB of coherent memory, a pool that combines the GPU’s HBM3e with CPU-attached LPDDR5X rather than 784GB of HBM alone, which lets it hold far larger models and datasets than the Spark. The architecture is designed for power efficiency, which matters on a desktop, while aiming at performance previously confined to data center systems, as detailed in Nvidia’s GTC keynote highlights.
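To see why memory capacity and bandwidth matter here, a back-of-envelope calculation helps. The sketch below is a rough estimate, not a benchmark: it assumes model weights dominate memory use (ignoring KV cache, activations, and runtime overhead) and that single-stream decoding of a dense model reads every weight once per token, so bandwidth divided by weight size gives an upper bound on tokens per second. The function names are illustrative.

```python
# Back-of-envelope sizing for model weights. Assumptions (not from the article):
# weights dominate memory use, and decoding reads every weight once per token.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Approximate size of the weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_memory(num_params: float, precision: str, memory_gb: float) -> bool:
    """True if the weights alone fit; KV cache and activations are ignored."""
    return weight_footprint_gb(num_params, precision) <= memory_gb


def max_decode_tokens_per_s(num_params: float, precision: str, bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream decode speed for a dense model."""
    return bandwidth_gb_s / weight_footprint_gb(num_params, precision)


SPARK_MEMORY_GB = 128       # from the spec list above
SPARK_BANDWIDTH_GB_S = 200  # the article's "exceeding 200GB/s" floor
PARAMS = 200e9              # the 200-billion-parameter figure quoted for the DGX Spark

for precision in BYTES_PER_PARAM:
    size = weight_footprint_gb(PARAMS, precision)
    ok = fits_in_memory(PARAMS, precision, SPARK_MEMORY_GB)
    print(f"{precision}: {size:.0f} GB of weights, fits in {SPARK_MEMORY_GB} GB: {ok}")
```

Under these assumptions, a 200-billion-parameter model needs about 100 GB at FP4, which fits in 128GB, while FP8 and wider formats do not. The same arithmetic suggests that decode speed for a model that large would be limited by memory bandwidth rather than raw compute.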

Real-World Use Cases: From Research to Prototyping

These systems cater to a wide array of applications. For students and independent developers, the DGX Spark can run simulations, prototype and fine-tune generative AI models of the kind behind ChatGPT, or fine-tune computer vision algorithms, all locally and without cloud dependency. The DGX Station, with its superior capacity, suits research labs developing next-gen AI, such as autonomous vehicle systems or advanced natural language processing. Businesses can use either system to prototype AI solutions before scaling them via DGX Cloud, making them ideal for agile development cycles.

Design Philosophy: Power Meets Personality

Nvidia’s approach with these systems blends functionality with flair. The DGX Spark’s smoked-gold finish and compact size (roughly 6 x 6 x 2 inches) reflect a nod to retro tech nostalgia while ensuring it fits on any desk. The DGX Station, though larger, maintains a sleek, professional aesthetic suited for labs or offices. This design philosophy, as Nvidia’s CEO Jensen Huang emphasized at GTC, aims to make AI tools “personal and approachable,” breaking from the utilitarian mold of traditional server hardware, according to PCMag.

Ecosystem Benefits: Beyond the Hardware

Purchasing a DGX Spark or Station isn’t just about hardware—it’s an entry into Nvidia’s AI ecosystem. Users gain access to Nvidia NIM microservices, which simplify deploying AI models like LLMs or image recognition systems. The AI Enterprise software suite provides tools for model optimization, security, and enterprise-grade support. This integration reduces setup time and enhances productivity, positioning these systems as more than standalone devices but as gateways to Nvidia’s broader AI platform, a point underscored by The Verge.

Future Implications: Democratizing AI Innovation

The release of these personal supercomputers signals a shift in AI accessibility. By bringing data-center-grade compute power to desktops at prices starting at $3,000, Nvidia challenges the notion that advanced AI development requires massive infrastructure investments. This could accelerate innovation in fields like healthcare (e.g., drug discovery AI), education (e.g., personalized learning models), and small-scale startups, as noted in industry analyses from Forbes. Long-term, it may reshape how AI talent is cultivated and deployed globally.

A New Era of Accessible AI Computing

Nvidia’s launch of the DGX Spark and DGX Station marks a significant milestone in making advanced AI computing more accessible. The DGX Spark, with its compact design and affordable price point, targets individual developers and small teams, while the DGX Station caters to those needing server-grade power. Together, they expand the reach of Nvidia’s Grace Blackwell technology, empowering a wide range of users to innovate in AI development.

Whether you’re a student tinkering with AI models or a researcher scaling complex algorithms, these personal supercomputers offer a compelling blend of performance and practicality—right from your desktop. For more details, check out Nvidia’s official announcement on their Newsroom page.

Latest Top 10 FAQs About DGX Spark and DGX Station (March 2025)

As of March 19, 2025, here are answers to the most frequently asked questions about Nvidia’s new personal AI supercomputers, based on the GTC 2025 announcement:

- What are the DGX Spark and DGX Station?

The DGX Spark and DGX Station are personal AI supercomputers powered by Nvidia’s Grace Blackwell platform, designed for AI developers, researchers, and students to prototype and run large AI models on desktops.

- How much does the DGX Spark cost?

The DGX Spark starts at around $3,000, with Nvidia’s 4TB Founders Edition priced at $3,999. Pricing may vary based on configurations from partners like ASUS.

- When will these systems be available?

DGX Spark preorders are open now, with shipping expected in summer 2025. The DGX Station will be available later in 2025 through manufacturing partners.

- What’s the difference between DGX Spark and DGX Station?

The DGX Spark is a compact system with the GB10 Superchip and 1 petaflop of performance, while the DGX Station is a larger, more powerful system with the Blackwell Ultra GPU and 784GB of memory for heavier workloads.

- Can they run games or regular PC software?

No, both systems run Nvidia’s DGX OS (a custom Ubuntu Linux), optimized for AI tasks, not gaming or typical desktop applications.

- What kind of AI models can they handle?

The DGX Spark supports models up to 200 billion parameters (e.g., GPT-3 scale), while the DGX Station can handle even larger models due to its greater memory and compute power.

- Do I need a data center to use them?

No, both are standalone desktop systems, though they can connect to Nvidia’s DGX Cloud or other infrastructures for expanded capabilities.

- Who is building these systems?

Nvidia partners with ASUS, Dell, HP, and Lenovo for the DGX Spark. The DGX Station will come from ASUS, BOXX, Dell, HP, Lambda, and Supermicro.

- What’s the power requirement?

The DGX Spark plugs into a standard wall outlet, while specific power details for the DGX Station are TBD but likely higher due to its server-class design.

- How do they integrate with Nvidia’s ecosystem?

They tie into Nvidia’s full-stack AI platform, including NIM microservices and the AI Enterprise suite, allowing seamless workflow transitions from desktop to cloud.